I Fixed My Bittensor Pitch

From bar disaster to working framework

Last month, over the holidays, I had a few drinks at a bar with an old school friend.

He’s sharp. Runs his own business. Reads the news.

He had no idea what Bittensor was, so I decided to try out the pitch I'd spent the last year refining.

I won’t run through it all, but it started with “It’s like... a decentralized AI marketplace.”

His face did that thing, the polite nod that means “I have absolutely no idea what you just said.”

I tried to recover.

“Okay, so there are these things called subnets, right? They’re like... specialized AI services that compete for rewards...”

“Rewards in what?”

“TAO. The token. It’s how the network distributes value based on…”

“Hold on a second. What’s a subnet again?”

I pivoted. “Think of it like... validators judge the quality of miners’ work, and then emissions get distributed based on consensus and…”

His pint stopped halfway to his mouth. “Validators? Emissions? Are we talking about AI or pollution control?”

Hurting inside, I pressed on, but I knew I’d already lost the battle.

I watched him check his phone. The conversation was over.

We talked about football for the rest of the night.

Later, driving home past closed shops and empty car parks, I replayed the conversation in my head. The pitch I’d practiced a hundred times in the shower, the one that sounded so clear in my own mind, had completely fallen apart in reality.

I knew I had a few weeks to fix this before I saw him again.

So I committed to an experiment: test five different Bittensor pitches on five different people, document exactly what breaks, and see if anything actually works.

Here’s what actually happened.

Test #1: My Stock-Picking Friend (The Investor)

The Angle: The Mispriced Opportunity

He’s a friend from college who works in finance. The type who bought Tesla in 2020 because he saw asymmetric upside, not ideology.

Over drinks, he mentioned AI investments felt “priced to perfection or obvious trash.”

“Everyone’s piling into AI infrastructure,” I said. “But every dollar reinforces the same three-company oligopoly. What’s mispriced is the alternative.”

He leaned in. “Go on.”

“Everyone assumes decentralized AI can’t work because the economics don’t close. Bittensor’s TAO fixes that: it’s an economic incentive for open AI infrastructure. Run models, validate outputs, then earn TAO. Bitcoin did this for decentralized ledgers. TAO does it for AI.”

My pitch: “TAO is a bet that monopoly AI eventually pisses off enough people that alternatives become valuable. The market’s pricing it like that never happens.”

What happened:

“Show me traction.”

I pulled up Chutes. “Run multiple open-source models side by side. Three dollars a month instead of $100+ for commercial APIs.”

“What’s the bear case?”

“OpenAI drops prices to zero. Governments kill crypto. Decentralized systems never match performance and users don’t care about privacy enough.”

“But if there’s any shift toward decentralized alternatives (regulation, privacy backlash, cost pressure), TAO captures it. It’s the only protocol-level economic layer for open AI.”

He nodded. “So it’s a call option on decentralized AI mattering.”

“Yes. And priced like it probably won’t.”

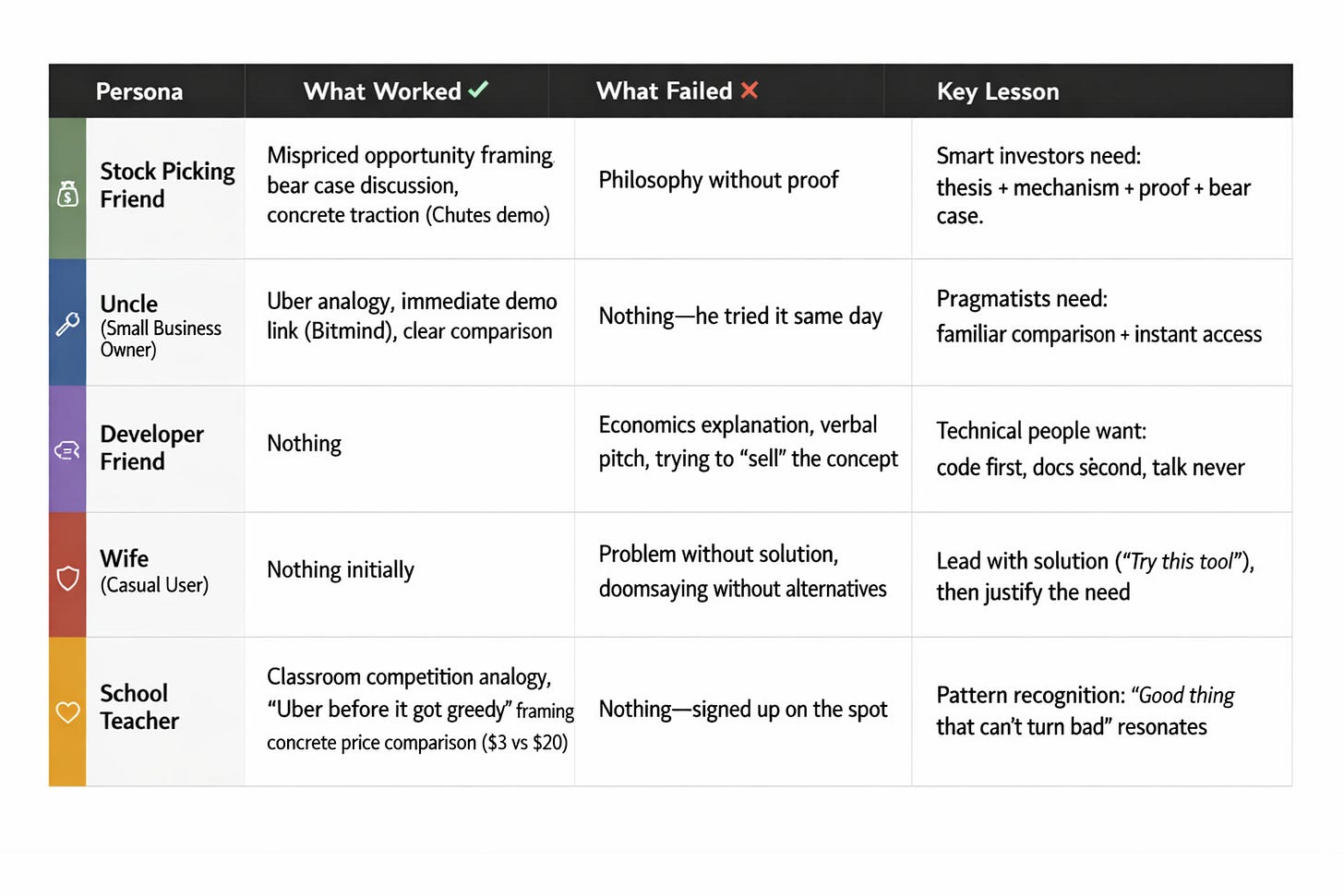

What I learned: Smart investors need the thesis (what’s mispriced), the mechanism (how value accrues), current proof (it works now), and the bear case (what breaks it). Skip the philosophy.

Test #2: My Uncle (The Pragmatist)

The Angle: Bittensor, the Machine Economy

He’s a small business owner. Practical. No time for theory. Just wants to know what this thing does.

“Remember calling a taxi after a night out? You’d wait 45 minutes, maybe they’d show up, maybe they wouldn’t. And you paid whatever they charged because they owned the monopoly.

“Then Uber happened. Five drivers competing for your fare. You could see their ratings and pick the best one. The marketplace solved the problem.

“That’s where we are with AI right now. Except instead of rides, it’s everything.”

The pitch: “It’s like Uber for AI. Instead of one company deciding what model you get, providers compete. The best service wins. You pay less, get more choice and keep your data private.”

What happened:

He got it. Immediately. “So it’s competition instead of monopoly?”

Then he asked: “Can I try it?”

I sent him to Bitmind to test deepfake detection. He used it that afternoon and sent me a screenshot. Win.

What I learned: Pragmatists don’t need philosophy. They need a clear comparison to something they already understand, then a link to try it immediately.

Test #3: My Developer Friend (The Builder)

The Angle: TAO, the Economic Primitive

This is where my bar pitch had fallen apart. I’d tried explaining tokens and validators to my school friend, a business owner, not a developer. Wrong audience, wrong approach.

But this friend codes for a living. He knows blockchain basics. I could go slightly technical.

We were grabbing lunch when he asked what I’d been working on lately.

“There’s this decentralized AI network called Bittensor,” I started. “You could run a miner and earn rewards for providing compute.”

His face lit up. “I’d like to see that repo. How does it work?”

Perfect.

The pitch: I started explaining the economic model. TAO flows, validators, consensus mechanisms...

What happened:

His eyes glazed over. I watched him mentally check out.

“You know what, just send me the setup docs,” he said, “I’ll look at it later.”

He didn’t look at it later.

What I learned: Technical people want to see code before they believe you. I should have sent documentation links first and let him read the README. Then, maybe, answered questions about economics if he asked.

Test #4: My Wife (The Skeptic/Casual User)

The Angle: Corporate AI Capture

Last week, my wife used ChatGPT to draft a sensitive work email about a colleague’s performance review. When I mentioned OpenAI was storing that conversation, training their models on it, she looked at me like I’d grown a second head.

“But it’s just helping me write an email.”

Right. Just like Facebook was just helping you stay in touch with friends.

Right now, three companies (OpenAI, Google, Anthropic) control the most powerful AI models in existence. They decide what questions you can ask, what answers you’ll get and what happens to your data.

Every conversation with ChatGPT trains their next model. Every image you generate becomes part of their dataset. The terms of service you didn’t read give them that right.

And when they decide something is “unsafe” or “against policy”—maybe mentioning a competitor, maybe criticizing their approach—you have zero recourse. No appeal. No alternative.

Imagine if three companies owned all the world’s electricity. They’d decide who gets power, when, and at what price. They’d monitor your usage and sell it to your insurance company.

That’s where we are with AI. Except it’s not powering your lights: it’s screening your job applications, diagnosing your medical scans and writing your code.

My pitch: “You know how you don’t trust Instagram with your data? This is that exact problem, but for AI. And the stakes are about ten times higher.”

What happened:

Believe me, this isn’t the first time I’ve tried to talk about Bittensor with my wife.

When I introduced the privacy element, she got worried about using AI at all and asked if she should delete ChatGPT from her phone.

What I learned: I gave her a problem she couldn’t solve. I should have offered her a solution first (“Try this privacy-focused AI tool I use”) and then justified the need for it. Otherwise I’m just a doomsayer with no answers.

At least I’ll get the chance to try again soon with a different angle.

Test #5: The School Teacher (The Idealist)

The Angle: Open Intelligence

I have a friend who left corporate to become a teacher. He’s the type who tries new apps enthusiastically, then gets disillusioned when they turn exploitative.

At a football game this month: “ChatGPT was amazing for lesson planning, then I read the terms. Every sensitive email about students, every curriculum draft, they’re mining it. And now I hear they’re bringing in ads too.”

Perfect opening.

“So you need AI, but can’t trust the only option that works.”

“Exactly.”

“Let me ask you this: in your classroom, do you let one student answer every question?”

He smiled. “Not at all. They’d get lazy.”

“That’s it: you make them compete. Three hands go up, and the best answer wins. That’s the difference between ChatGPT and what I’m about to show you. Inside their app, ChatGPT is one company with no competition. What if dozens of AI services had to compete to give you the best lesson plans?”

“That sounds too good to be true.”

My pitch: “There’s a network where AI providers compete for your business. Better service, lower prices, and they can’t sell your data. It’s like Uber when it first started, except no one company can ruin it later.”

What happened:

I showed him Chutes. “Three bucks a month, not $20.”

“What’s the catch?”

“It’s slightly less convenient. But it won’t double in price next year, and no one can read your lesson plans to train their next model.”

“So it won’t turn into another Uber.”

“It can’t.”

He handed me his phone. “Sign me up.”

What I learned: He already knows the pattern: good thing gets ruined by monopoly. Position it as “Uber before Uber got greedy, except the greed is impossible.” Then show them it works.

The Pattern I Missed (And You Probably Have Too)

Here’s what I should have seen from the start. Every single failure happened because I used my entry point, not theirs.

Bittensor is building 100+ parallel economies, each with different incentives and users. The mistake most advocates make is having one pitch for a system that deliberately fragments into niches.

Will this work every time?

No. Some people are simply not ready for this conversation and that’s fine.

Bittensor’s adoption will be a grind, not a viral moment. But if you diagnose first and pitch second, you’ll waste less breath and build more believers.

Your Diagnostic Cheat Sheet

Before you pitch Bittensor to anyone, spend 30 seconds asking yourself:

What do they care about most?

Money/Returns: Use the Investor angle (mispriced opportunity, TAO as a bet)

Simplicity/Utility: Use the Pragmatist angle (Uber for AI, competition vs. monopoly)

Control/Privacy: Use the Skeptic angle (corporate capture, data ownership)

Building/Creating: Skip the pitch, send docs and a working example

Values/Fairness: Use the Idealist angle (platforms that can’t rug you)

What’s their technical level?

Non-technical: Analogies only, zero jargon, show them a working product

Technical: Link first, explain later (if ever)

What’s their crypto comfort?

Crypto native: You can mention TAO, emissions, subnets

Crypto skeptical: Hide the blockchain entirely, focus on the outcome

Match the angle to the person. Not the other way around.

Your Turn

I want to know: which of these five pitches would work on someone in your life?

Pick one person. Diagnose them using the cheat sheet above. Try the corresponding angle this week.

Then hit reply and tell me what happened. What questions stumped you? What worked better than you expected? What broke that I didn’t predict?

I’m collecting these stories, the real ones, where things go sideways in ways nobody planned for. That’s where the good insights hide.

Until next time.

Cheers,

Brian
